As increasingly accessible and advanced artificial intelligence tools have been released, new risks have emerged. Deepfake attacks, which threaten everyone including cryptocurrency investors, have become much simpler to carry out. So much so that even children aged 12-13 could, with some time, prepare content suitable for these types of attacks.

Attacks Have Become Easier

Years ago, we saw that even very young children could plan and carry out complex attacks as access to Trojans and similar remote access trojan (RAT) malware became easier. Once a victim simply opened an “exe” file, the attacker could access everything, including the victim's camera, keyboard input, and stored data. The number of such attackers increased, and so did the scale of losses caused by these attacks.

Now, in the next stage of the digital age, we are entering a period in which AI-supported attacks are becoming much easier. A high school student could use a teacher’s voice and image to produce content simply as a joke among friends, or could use the same tools to target friends and teachers.

Seen this way, the easier attacks become, the larger the risk grows. We are entering a new era in which attacks that target cryptocurrency investors with personalized scenarios are likely to become widespread.

Beware of Deepfake Attacks

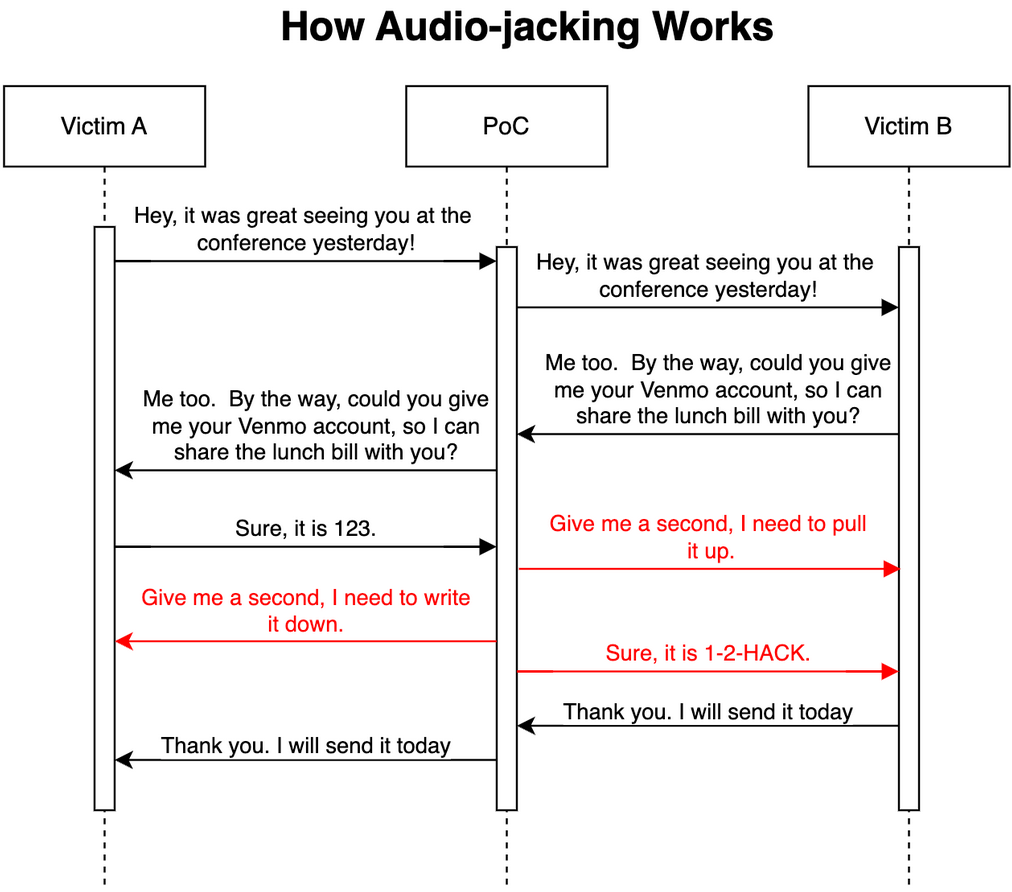

IBM Security researchers recently discovered a technique for manipulating and altering live conversations that they described as “surprisingly and frighteningly easy.” The attack, named “Audio-jacking,” relies on generative AI, a class of artificial intelligence that includes OpenAI’s ChatGPT and Meta’s Llama-2, combined with deepfake voice technology.

In the experiment, the artificial intelligence was able to catch designated words during a phone conversation and relay altered content to the other party in the same voice. For example, suppose your boss calls you and instructs you over the phone to send money to an address. When the conversation reaches the address, the attackers can trap you by substituting a different address, as if your boss had spoken it.
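The keyword-triggered substitution described above can be illustrated with a toy, text-only sketch. It assumes the call has already been transcribed into text segments, and it does not reproduce IBM's proof-of-concept, which worked on live audio with generative AI and deepfake voice technology. The function name `relay_segment`, the digit pattern, and the sample values are all hypothetical.

```python
import re

# Hypothetical pattern: any run of 8 to 20 digits is treated as an account number.
ACCOUNT_PATTERN = re.compile(r"\b\d{8,20}\b")


def relay_segment(segment: str, trigger: str, substitute: str) -> str:
    """Return the text that would be forwarded to the listener.

    Segments without the trigger phrase pass through untouched, which is
    why most of the conversation sounds completely normal to both sides.
    """
    if trigger.lower() not in segment.lower():
        return segment
    # Only the sensitive detail is swapped; the rest of the sentence is kept.
    return ACCOUNT_PATTERN.sub(substitute, segment)


if __name__ == "__main__":
    spoken = [
        "Hi, do you have a minute?",
        "Please send it to bank account 12345678 today.",
    ]
    for line in spoken:
        heard = relay_segment(line, "bank account", "99998888")
        status = "ALTERED" if heard != line else "unchanged"
        print(f"{status}: {heard}")
```

Because only the segment containing the trigger phrase changes, comparing the detail you heard with the same detail confirmed over a second, independent channel is one way such a swap could be noticed.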

A recent incident in Asia involving an employee was quite alarming. The employee received a call from the Finance Director asking for a transfer of $25 million to a given address. The money was sent, and it was later revealed that the Finance Director had never made such a call.

According to IBM Security’s blog post, the artificial intelligence in the experiment successfully passed the would-be scammer’s bank account details (for the money transfer) to the other party.

“Creating this PoC (proof-of-concept) was surprisingly and frighteningly easy. We spent most of our time figuring out how to capture sound from a microphone and how to feed it to the generative AI. We only need three seconds to clone an individual’s voice.”
